People who have access to GPT-4 can also use DALL-E, and can thus enjoy creating images from a scene description given as text and/or keywords at a prompt. Multi-modal AI makes this possible. The catch is that such images are created on some online server, so OpenAI and others can gather information about what you are doing. This has both positive and negative aspects.

Last weekend I found out that a Linux PC with a current-generation NVIDIA GPU is a good platform for working autonomously with pre-trained models. You “just” need a few gigabytes of disk space, a working installation of Python 3, CUDA 12.x and JupyterLab, and a GPU with more than 8 GB of VRAM. Plus some imagination. Hours of fun are guaranteed …
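
As a quick sanity check of the hardware side of these requirements, the following sketch queries the installed GPU(s) via the `nvidia-smi` tool that ships with the NVIDIA driver and compares the reported memory against the 8 GB threshold. It assumes Python 3.7+ and treats "8 GB" as 8 GiB (8192 MiB, the unit `nvidia-smi` reports). The helper names are hypothetical, and CUDA 12.x and JupyterLab are not checked here.

```python
import shutil
import subprocess
import sys

# Assumption: "more than 8 GB" interpreted as more than 8 GiB, in MiB as nvidia-smi reports it.
MIN_VRAM_MIB = 8 * 1024


def parse_gpu_csv(text):
    """Parse 'name, memory.total' lines from nvidia-smi into (name, MiB) tuples."""
    gpus = []
    for line in text.strip().splitlines():
        name, mem = line.rsplit(",", 1)  # split at the last comma: name, memory
        gpus.append((name.strip(), int(mem)))
    return gpus


def query_gpus():
    """Return [(name, MiB), ...] for all NVIDIA GPUs, or [] if nvidia-smi is not found."""
    if shutil.which("nvidia-smi") is None:
        return []
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=True,
    ).stdout
    return parse_gpu_csv(out)


def has_enough_vram(gpus, min_mib=MIN_VRAM_MIB):
    """True if at least one GPU reports more than min_mib of memory."""
    return any(mem > min_mib for _, mem in gpus)


def python_ok():
    """True when running under Python 3 or later."""
    return sys.version_info.major >= 3
```

Calling `has_enough_vram(query_gpus())` returns `False` both for cards with 8 GB or less and on machines where `nvidia-smi` is not available.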

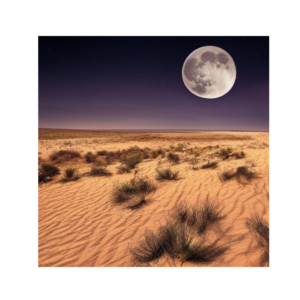

Although you may not get the quality of a GPT-4/DALL-E combination, you can create photorealistic 512×512 images or digital paintings. The basic examples shown here were done with the Stable Diffusion (V1) model from Stability AI, which has been integrated into KerasCV.
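
A minimal sketch of the text-to-image step, assuming the `keras_cv` package (with a GPU-enabled TensorFlow) and Pillow are installed. `keras_cv.models.StableDiffusion` and its `text_to_image` method come from KerasCV; the helper names `load_model`, `generate` and `save_images`, the file prefix and the default of three images per prompt are hypothetical choices for illustration, not the author's exact code.

```python
def load_model(jit_compile=False):
    """Build the KerasCV Stable Diffusion (V1) model for 512x512 output."""
    import keras_cv  # imported lazily; the weights are downloaded on first use

    return keras_cv.models.StableDiffusion(
        img_width=512, img_height=512, jit_compile=jit_compile
    )


def generate(model, prompt, batch_size=3, num_steps=50, seed=None):
    """Run text-to-image; KerasCV returns a uint8 array of shape (batch, 512, 512, 3)."""
    return model.text_to_image(
        prompt, batch_size=batch_size, num_steps=num_steps, seed=seed
    )


def save_images(images, prefix="sd_image"):
    """Write each image to <prefix>_<i>.png and return the list of file names."""
    from PIL import Image  # Pillow, assumed to be installed

    names = []
    for i, img in enumerate(images):
        name = f"{prefix}_{i}.png"
        Image.fromarray(img).save(name)
        names.append(name)
    return names
```

In a Jupyter cell, `save_images(generate(load_model(), "photograph of an astronaut riding a horse"))` would then write three PNG files. Building the model once and reusing it across prompts avoids reloading the weights for every call.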


But so much more is possible! For examples, see https://github.com/Stability-AI/stablediffusion.

I have given a very basic description of the required steps in a post on another blog. See: “Prompt based image generation with Stable Diffusion on a TI 4060 ?!”

All this is possible thanks to the efforts of the Stability AI group and the KerasCV team. I want to thank all these people for making their code and model weights available to the public! I had a really enjoyable weekend with their tools, and I have not even tried out the StableDiffusionV2 model yet. So more fun awaits me …